Insights for Transformation.

Stop following the news. Start architecting the future. These are the proprietary production patterns, AI-native frameworks, and lightning strikes we use to transform ideas into Category Kings.

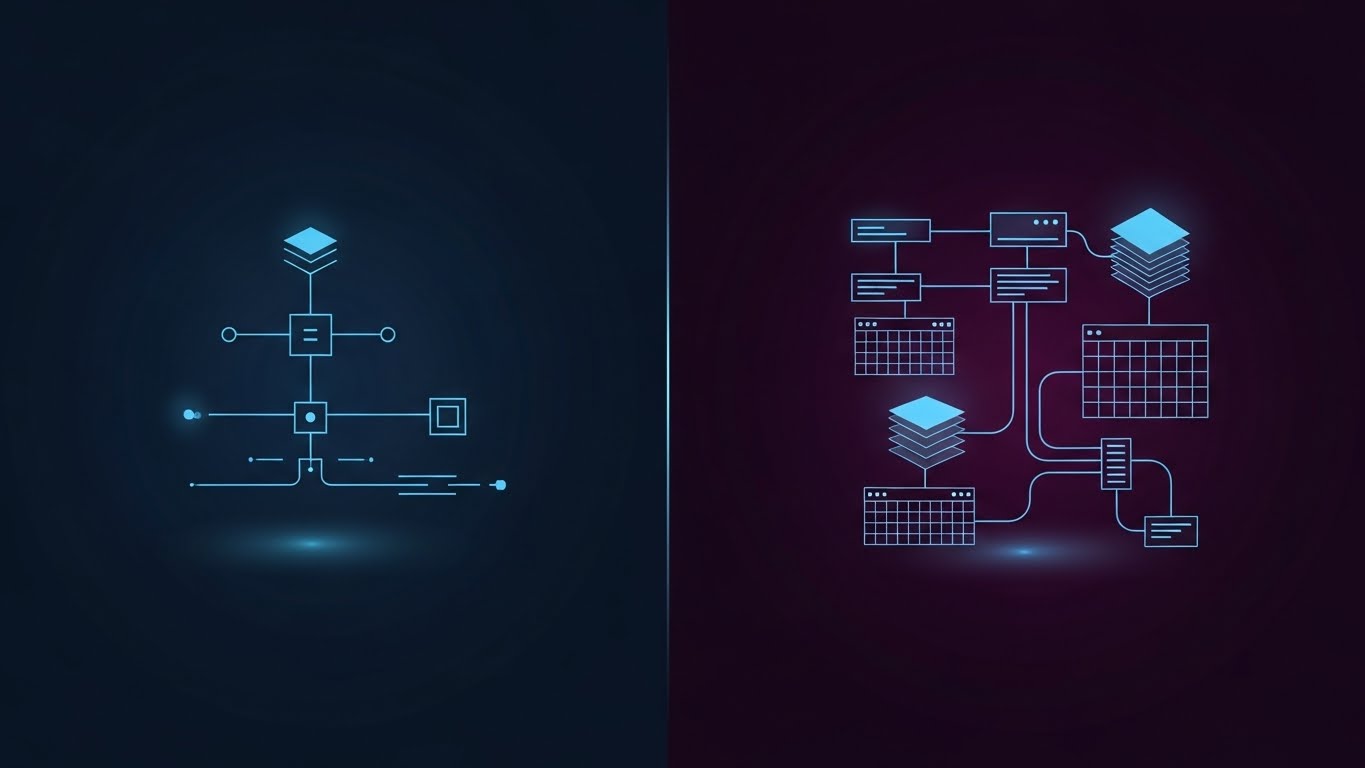

AI coding tools are no longer just smarter autocomplete engines. They now span a wide spectrum, from IDE-native assistants that suggest code inline to agentic systems capable of planning, modifying, and executing changes across an entire repository. Because of that shift, choosing the right tool depends less on feature lists and far more on the stage of the company using it. What accelerates a startup can easily slow down or destabilize a scale-up.

AI tools have moved from standalone chat interfaces into the tools people use every day. One of the most interesting examples of this shift is Comet, an AI-native web browser designed to blend browsing, search, and task execution into a single experience. This article explains what Comet is, how it works, what makes it different from traditional browsers, and where it fits realistically in modern workflows. The goal is clarity, not hype.

AI coding agents in 2026 are no longer just autocomplete tools. They plan changes, reason across repositories, and in some cases execute tasks end to end. For engineering teams, the real question is not which agent is the smartest, but which one fits how your team builds, reviews, and ships software. This guide compares the leading AI coding agents through a team-first lens, focusing on autonomy, control, and practical adoption.