Choosing an AI Coding Tool for Startups vs Scale-Ups

Why company stage changes everything

Startups and scale-ups optimize for fundamentally different things.

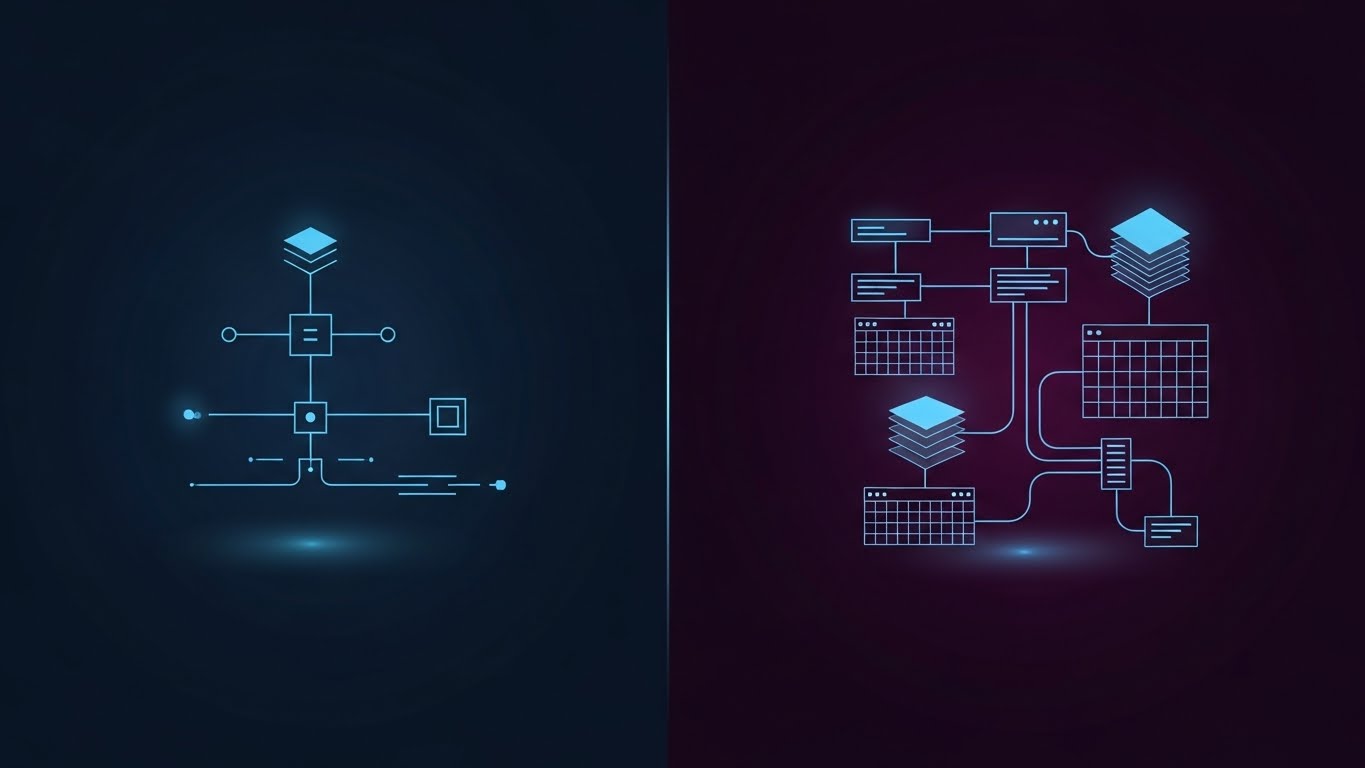

Early-stage teams are trying to learn fast. Codebases are small, architecture is still fluid, and most developers carry a large portion of the system in their heads. Speed and adaptability matter more than strict correctness.

Scale-ups face the opposite reality. Codebases are larger, ownership is distributed, and changes tend to ripple across multiple systems. Here, reliability, coordination, and predictability matter more than raw typing speed.

An AI coding tool amplifies whatever environment it is placed in. That is why context matters more than capability.

How startups should think about AI coding tools

In startups, the main bottleneck is usually not reasoning across thousands of files. It is momentum. Developers want to move quickly, test ideas, and discard work without heavy process overhead.

IDE-native AI tools tend to fit this phase well because they stay close to the developer’s intent. They assist with writing and understanding code without taking control away. The developer remains the decision-maker at every step, which keeps mistakes visible and easy to correct.

This matters because early-stage code changes are frequent and experimental. When something breaks, the feedback loop is short. A tool that behaves predictably and stays within the IDE supports this rhythm rather than disrupting it.

The most common mistake startups make is adopting highly autonomous, agentic tools too early. When an AI starts modifying multiple files or making architectural decisions on its own, debugging becomes harder. In a small team, that hidden complexity can quickly outweigh any time saved.

What changes as teams scale

As a company grows, the nature of work shifts. Developers spend less time writing new features from scratch and more time navigating existing systems, updating dependencies, refactoring legacy code, and coordinating changes across teams.

At this stage, the pain is no longer about typing speed. It is about cognitive load and the tendency to put off unpleasant work. Large refactors get postponed because they are tedious and risky. Maintenance tasks pile up because they are time-consuming and unglamorous.

This is where agentic AI tools begin to show real value.

When tasks are well-defined but expensive to execute manually, an autonomous assistant can act as leverage. It can perform repetitive, cross-cutting changes that humans would rather not touch, while engineers focus on review and validation instead of execution.

When autonomy becomes an advantage instead of a risk

Agentic tools work best in environments that already have structure. Clear task definitions, strong code review practices, and ownership boundaries are essential. Without them, autonomy turns into uncertainty.

In a mature setup, however, the same autonomy becomes an asset. Large dependency upgrades, systematic refactors, test generation across modules, and migration work are all areas where agentic tools can reduce friction significantly.

The key difference is that scale-ups can absorb mistakes better. They have processes designed to catch issues before they reach production. Autonomy is no longer uncontrolled. It is constrained and supervised.

A more useful way to decide

Instead of asking which AI coding tool is best, teams should ask what kind of risk they are equipped to manage.

If most of the codebase still fits comfortably in a developer’s head, heavy autonomy is unnecessary. If large changes are routinely delayed because they are painful to execute, autonomy may help. If code review is informal or inconsistent, agentic tools will introduce more problems than they solve.

The tool should match the team’s capacity for oversight, not its appetite for novelty.
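
The three questions above can be read as a small decision rule. The sketch below is only an illustration: the `TeamProfile` fields, the `Recommendation` names, and the order in which the checks run are assumptions made for the example, not a formal method. It checks review quality first because the article treats inconsistent review as the condition under which agentic tools do more harm than good.

```python
from dataclasses import dataclass
from enum import Enum


class Recommendation(Enum):
    # Hypothetical labels for the two tool categories discussed in the article.
    IDE_NATIVE = "IDE-native assistant"
    AGENTIC_SCOPED = "agentic tool for scoped tasks"


@dataclass
class TeamProfile:
    # Hypothetical yes/no answers to the three questions in the text.
    codebase_fits_in_head: bool
    large_changes_routinely_delayed: bool
    code_review_is_consistent: bool


def recommend(team: TeamProfile) -> Recommendation:
    # Informal or inconsistent review: autonomy adds more problems than it solves.
    if not team.code_review_is_consistent:
        return Recommendation.IDE_NATIVE
    # Codebase still fits in a developer's head: heavy autonomy is unnecessary.
    if team.codebase_fits_in_head:
        return Recommendation.IDE_NATIVE
    # Large changes keep getting postponed and review is solid: autonomy may help.
    if team.large_changes_routinely_delayed:
        return Recommendation.AGENTIC_SCOPED
    # No clear pain point that autonomy would address.
    return Recommendation.IDE_NATIVE


if __name__ == "__main__":
    scale_up = TeamProfile(
        codebase_fits_in_head=False,
        large_changes_routinely_delayed=True,
        code_review_is_consistent=True,
    )
    print(recommend(scale_up).value)
```

A real team would weigh these answers rather than treat them as strict booleans. The point of the sketch is that oversight capacity gates the decision before any potential benefit is considered.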

The hybrid reality most teams arrive at

In practice, many organizations do not choose one category exclusively. They evolve into a hybrid model.

IDE-native assistants remain part of daily development, helping with local reasoning and fast iteration. Agentic tools are reserved for scoped tasks where autonomy creates leverage and the cost of review is justified.

This balance avoids the false choice between speed and safety.

There is no universally correct AI coding tool.

Startups gain the most from assistants that stay close to the developer and reduce friction without introducing hidden complexity. Scale-ups unlock value from autonomy only when it is paired with strong review, clear ownership, and disciplined workflows.

The right choice is not about how powerful the AI is. It is about how much uncertainty your team can safely absorb.